By Nick Butler

Tags: Agile, Development

Reducing batch size is a secret weapon in Agile software development. In this post we look at what batch size is, how small batches reduce risks, and what practical steps we can take to reduce batch size.

We must develop software quicker than customers can change their mind about what they want.

Mary Poppendieck

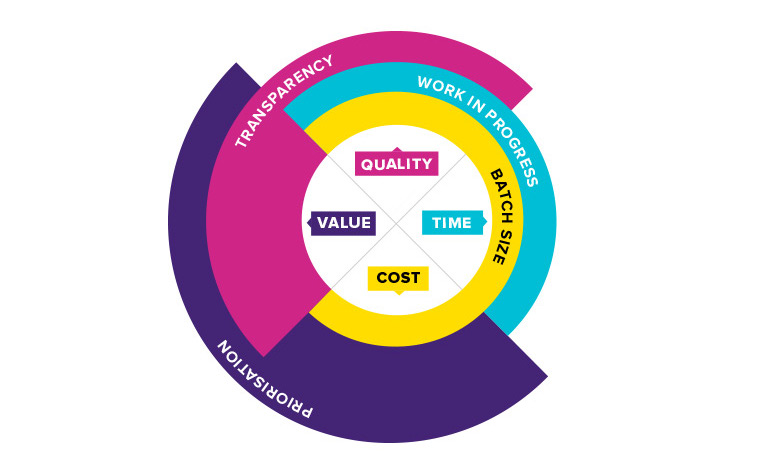

As we saw in our earlier posts, the four key risk management tools for Agile software development — prioritisation, transparency, batch size and work in progress — each reduce one or more of the most common risks: risks to quality, time, cost and value.


When we reduce batch size we get our products to market faster and get to discover quality issues early and often. As a result, we reduce the risk that we’ll go over time and budget or that we’ll fail to deliver the quality our customers demand.

Batch size is the amount of work we transport between stages in our workflow. Importantly, we can only consider a batch complete when it has progressed the full length of our workflow, and it is delivering value. Take building a new mobile application as an example. If we started by completing all of the analysis before handing off to another team or team member to begin developing the application we would have a larger batch size than if we completed the analysis on one feature before handing it off to be developed. And the batch will only be complete when customers are able to use and enjoy the application.

The needs of the market change so quickly that if we take years to develop and deliver a solution we risk delivering it to a market that has moved on. The software industry has been plagued by late discovery of both quality problems and user needs.

Projects may be many months or even years in progress before a single line of code is tested. It is not until this late stage that the quality of the code, and the thinking that inspired the code, becomes visible. Historically this has happened too late. We have over-invested in discovery and analysis without being able to measure quality. This leads to project overruns in time and money and to delivering out-of-date solutions.

Batch size is the amount of work we do before releasing or integrating. Often projects work with a number of different batch sizes. There may be a batch size for each stage (funding, specification, architecture, design, development etc), a batch size for release to testing and a batch size for release to the customer (internal or external).

In the context of an Agile software development project we see batch size at different scales. There is the user story or use case, which is the unit of work for the team. Stories inherently have a limited batch size as they are sized so they can be delivered in a single iteration. Stories may be the constituent parts of larger features, themes or epics. In a scaled Agile environment we may also see portfolio epics as a batch. If the Agile team is a Scrum team we also have the Sprint as a batch of work. Again, small batch size is built in because we aim for short Sprints and only bring in the stories we estimate we can complete in that Sprint.

Individuals on a team may take their batch of work (a story or use case) and break it down further by continuously integrating their work every few minutes or hours.

Then there is the release, which is the unit of work for the business.

In The Principles of Product Development Flow, his seminal work on second generation Lean product development, Don Reinertsen describes batch size as one of the product developer’s most important tools.

Reinertsen points out that it can feel counterintuitive to reduce batch size because large batches seem to offer economies of scale. Analysing the economics of batch size, however, reveals that these economies are significantly outweighed by the benefits of reducing batch size.

As batch size increases so does the effort involved and the time taken to complete the batch. This causes four key issues. First, transparency is reduced, which can lead to late discovery of issues, of cost and of value. Second, integration effort is increased: the amount of time and effort needed to integrate the new batch into the existing code base rises as the size of the batch increases. Third, value is delayed. Fourth, variability increases.

Let’s look at each of these issues in more detail.

There are two main reasons larger batches reduce transparency.

Because they take longer to complete, large batches delay the identification of risks to quality. With a year-long batch, we only discover the quality of our work at the end of that year. In week-long batches, we discover the quality every week. This means we get to influence that quality every week rather than having to deal with historical quality issues. Because security is a key quality factor, reducing batch size is an important way to manage security risks in Agile software projects.

Moreover, large batches tend to have more moving parts. The interrelations between these different parts make the system more complex, harder to understand and less transparent.

These two issues feed into each other. It’s harder to identify the causes of historical quality issues with a big batch project because it’s hard to disentangle the multiple moving parts.

As a result you also lose transparency of capacity. It’s not until you have released the batch, and remediated the historical quality issues, that you can quantify how much work you have the capacity to complete over a set period.

It’s common for large batch projects to become too big to fail. As a result the business decides to add whatever resource is needed to get the project over the line. Teams are often pressured to work longer hours not charged to the project. This may complete the project but disguises the actual resource required. It also results in burnout and consequent staff retention issues.

The complexity created by multiple moving parts means it takes more effort to integrate large batches. The bigger the batch, the more component parts, and the more relationships between component parts.

Take debugging, for example. Each line of code you add can interact with the code already there, so the number of potential relationships grows much faster than the code itself. This makes it disproportionately harder to identify and fix the cause or causes of a bug.
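To make this growth concrete, here is a minimal sketch. It assumes a "relationship" is either a pair of parts that may interact, or any combination of parts that may be involved in a bug. Both function names are hypothetical and only illustrate the arithmetic.

```python
from math import comb


def pairwise_relationships(parts: int) -> int:
    """Distinct pairs among `parts` components: n * (n - 1) / 2."""
    return comb(parts, 2)


def possible_combinations(parts: int) -> int:
    """Non-empty combinations of `parts` components: 2**n - 1."""
    return 2 ** parts - 1


# Assumption: every part can potentially interact with every other part.
for parts in (5, 10, 20):
    print(parts, pairwise_relationships(parts), possible_combinations(parts))
```

Doubling the parts from 10 to 20 roughly quadruples the pairs (45 to 190), while the number of combinations that could be behind a bug goes from about a thousand to about a million.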

We see similar increases in effort when we come to deploy and release.

Larger batches take longer to complete, and therefore longer to deliver value. Value delayed is a cost to the business.

This delay affects both the learning benefits you get from, for example, beta testing an increment of potentially shippable software, and the value you provide your users when you deploy software.

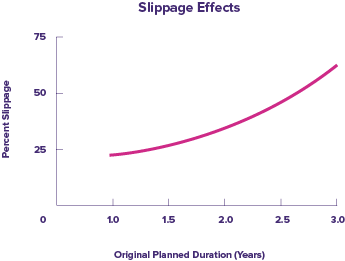

Compounding this delay is the increased likelihood of slippage, as cost and completion targets get pushed out. Reinertsen reports that slippage grows far faster than batch size. He says one organisation told him their slippage increased with the fourth power of project duration: when they doubled the duration, slippage increased 16 times.
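The fourth-power relationship is easy to check with a few lines of arithmetic. This sketch assumes slippage scales as duration raised to a fixed exponent. The exponent of 4 is what that one organisation reported, not a general law, and the function name is hypothetical.

```python
def slippage_multiplier(duration_ratio: float, exponent: float = 4.0) -> float:
    """How much slippage changes when project duration changes by `duration_ratio`.

    Assumption: slippage is proportional to duration ** exponent.
    """
    return duration_ratio ** exponent


print(slippage_multiplier(2))    # doubling the duration
print(slippage_multiplier(0.5))  # halving the duration
```

Under this assumption, halving the duration cuts slippage to one sixteenth, which is the argument for smaller batches in reverse.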

We’ve found that by tightly prioritising the functionality of our products we’ve been able to reduce batch size and speed up the delivery of value. Learn more in our case study on reducing risk with Agile prioritisation on the IntuitionHQ project.

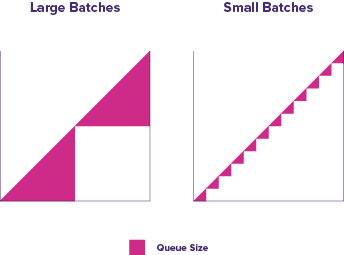

As large batches move through our workflows they cause periodic overloads. Reinertsen compares it to a restaurant. If 100 customers arrive at the same time then the waiting staff and kitchen won’t be able to cope, resulting in big queues, long waits and disgruntled customers. If, on the other hand, the patrons arrive in smaller groups, there will be sufficient resource available to complete each order in good time.

The same applies to software development. If you’ve worked on a new version of an application for a year before you test it, your testing team will be overloaded and will cause delays.
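We can sketch the restaurant comparison as a tiny queue simulation. The numbers are illustrative assumptions rather than Reinertsen's: 100 customers in total, a kitchen that can serve 10 customers per time period, and first come, first served.

```python
def average_wait(arrivals: list[int], capacity_per_tick: int) -> float:
    """Average number of ticks a customer waits before being served.

    arrivals[t] is how many customers arrive at tick t. The kitchen serves
    up to `capacity_per_tick` customers per tick, first come, first served.
    """
    queue: list[int] = []  # arrival tick of each waiting customer
    total_wait = 0
    served = 0
    tick = 0
    while tick < len(arrivals) or queue:
        if tick < len(arrivals):
            queue.extend([tick] * arrivals[tick])
        for arrived_at in queue[:capacity_per_tick]:
            total_wait += tick - arrived_at
            served += 1
        del queue[:capacity_per_tick]
        tick += 1
    return total_wait / served if served else 0.0


big_batch = [100] + [0] * 9   # everyone arrives at once
small_batches = [10] * 10     # the same 100 customers, spread out

print(average_wait(big_batch, capacity_per_tick=10))
print(average_wait(small_batches, capacity_per_tick=10))
```

The kitchen does the same amount of work in both cases. Only the arrival pattern changes, and with it the queue.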

Fill in the table below to see how your project or organisation is doing with batch size.


| Practice | Yes/No |
|---|---|
| Batch size is monitored at all stages (e.g. funding, planning, discovery, prototyping, defining requirements, development, testing, integration, deployment) | |
| Batch size is controlled at all stages | |
| Teams break work down into the smallest sensible batch sizes | |
| Teams deliver work in increments of potentially shippable software | |
| Teams complete work in vertical slices | |
| Test Driven Development is employed | |
| Teams integrate early and often | |
| Teams deploy frequently | |
| Communication is in small batches, ideally face-to-face and in good time | |

Add up one point for every question to which you answered ‘yes’.

0-2 points: Batch size is not being reduced or measured. There are a number of small but effective practices you can implement to start getting the benefits of reducing batch size.

3-6 points: You are reducing and/or measuring batch size. You may already be seeing the benefits of reducing the size of the batches you work in, but you have the opportunity to make further improvements.

7-9 points: You have reduced your batch sizes and are likely to be seeing the benefits! Can you take it to the next level?
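If you want to tally the checklist for several teams, the scoring above can be sketched in a few lines. The function name is hypothetical. The bands are the ones listed above, and we assume one yes/no answer for each of the nine practices.

```python
def score_checklist(answers: list[bool]) -> tuple[int, str]:
    """Return (points, band) for the nine yes/no checklist answers."""
    if len(answers) != 9:
        raise ValueError("expected one answer for each of the nine practices")
    points = sum(1 for answer in answers if answer)  # one point per 'yes'
    if points <= 2:
        band = "Batch size is not being reduced or measured"
    elif points <= 6:
        band = "You are reducing and/or measuring batch size"
    else:
        band = "You have reduced your batch sizes"
    return points, band


print(score_checklist([True, True, True, False, False, True, False, False, False]))
```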

The ideal batch size is a tradeoff between the cost of pushing a batch to the next stage (e.g. the cost of testing a new release) and the cost of holding onto the batch (e.g. lost revenue).

The interplay of transaction cost and holding cost produces a forgiving, U-shaped curve for total cost. You don’t need to be precise. It’s hard to make batches too small, and if you do, it’s easy to revert. This means we can use the following heuristic:

Tip: Make batches as small as possible. Then halve them.
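To see why the curve is forgiving, here is a minimal sketch that borrows the classic economic order quantity model as a stand-in. That model is our assumption, not something taken from a specific project: transaction cost is paid once per batch, holding cost grows with batch size, and all names and numbers are illustrative.

```python
from math import sqrt


def total_cost(batch_size: float, demand: float,
               transaction_cost: float, holding_cost: float) -> float:
    """Transaction cost per batch times number of batches, plus holding cost.

    Assumption: on average half a batch is being held at any time.
    """
    return transaction_cost * demand / batch_size + holding_cost * batch_size / 2


def optimal_batch_size(demand: float, transaction_cost: float,
                       holding_cost: float) -> float:
    """Batch size that minimises total_cost under the model above."""
    return sqrt(2 * demand * transaction_cost / holding_cost)


best = optimal_batch_size(demand=100, transaction_cost=8, holding_cost=1)
for size in (best / 2, best, best * 2):
    print(size, total_cost(size, demand=100, transaction_cost=8, holding_cost=1))

# Cutting the transaction cost to a quarter halves the optimal batch size.
print(optimal_batch_size(demand=100, transaction_cost=2, holding_cost=1))
```

In this model, missing the optimum by a factor of two in either direction costs only 25% more. Lowering the transaction cost moves the optimum towards smaller batches.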

In order to make small batches economic we need to reduce the transaction costs. Many of the practical tools for reducing batch size achieve this by increasing transparency, making communication easier, precluding the need for handoff, and automating and hardening processes.

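Let us now look at some of the key tools for managing batch size in Agile: smaller user stories, vertical slices, Test Driven Development, continuous integration, frequent deployment and co-location.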

Having larger stories in an iteration increases the risk that they will not be completed in that iteration.

To minimise the size of our user stories we need to zero in on the least we can do and still deliver value. Following the INVEST mnemonic, our stories should be:

Independent

Negotiable

Valuable

Estimable

Small

Testable

This means we should always write small stories from scratch. Sometimes, however, our planning for the iteration will identify a story that is too large. In this instance we need to split the story to isolate the must-haves from the nice-to-haves. Nice-to-haves can include:

When looking for ways to split a story we can check for:

Often large stories result from a desire to provide our users with the best possible experience from day one. But giving them immediate value and improving on this incrementally provides greater value overall.

Richard Lawrence has produced a detailed and useful set of resources on how to split user stories.

One specific way we can focus on the smallest increment of value is by working on vertical slices of the system.

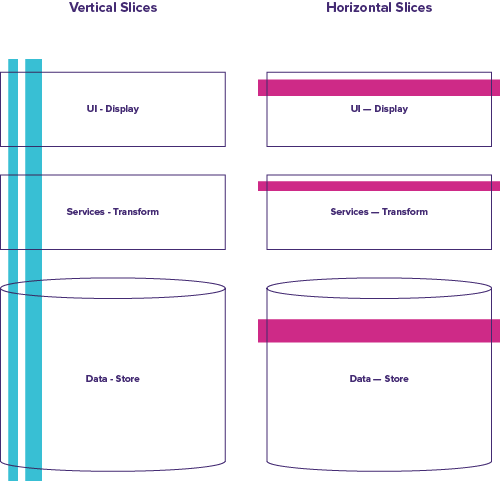

Development of a system that is divided into multiple architectural layers (such as Data, Services and User Interface) can proceed one layer at a time, or it can proceed across all the architectural layers at once; we can work horizontally or vertically.

It is common for projects to start at the bottom, completing all the work required to build the full product at each level. The work is then handed off to the team that specialises in the next layer. This means that you can only deliver value when the top layer has been completed. It also results in a very large batch, with bottlenecks at each stage.

The alternative is to have a single cross-functional, multi-disciplinary team and work on all the layers of a tightly-focussed piece of functionality. This will be a potentially shippable increment of our software that can either be deployed or beta tested. We can facilitate this by setting up a DevOps environment that integrates development and IT operations.

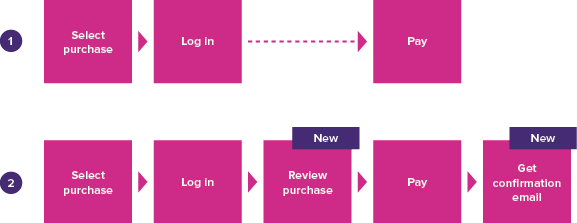

As an example of a vertical slice, you might start a basic e-commerce site with this minimal functionality.

Select purchase > Log in > Pay

Later stories can then expand this to:

Select purchase > Log in > Review purchase [new] > Pay > Get confirmation email [new]

As we add new functionality we can refactor or build reusable components to keep the code clean and efficient.
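As a rough sketch of that first slice, the code below touches all three layers but builds only what "Select purchase > Log in > Pay" needs. Every name here is hypothetical, the data is held in memory, and passwords are stored in plain text purely to keep the illustration short.

```python
from dataclasses import dataclass, field


# Data layer: only the data this slice needs (hypothetical in-memory store).
@dataclass
class Store:
    users: dict[str, str] = field(default_factory=lambda: {"ana": "secret"})
    prices: dict[str, int] = field(default_factory=lambda: {"book": 20})
    orders: list[tuple[str, str, int]] = field(default_factory=list)


# Services layer: just log in and pay.
def log_in(store: Store, user: str, password: str) -> bool:
    return store.users.get(user) == password


def pay(store: Store, user: str, item: str) -> int:
    amount = store.prices[item]
    store.orders.append((user, item, amount))
    return amount


# User interface layer: one flow, select purchase > log in > pay.
def checkout(store: Store, user: str, password: str, item: str) -> str:
    if item not in store.prices:
        return "Unknown item"
    if not log_in(store, user, password):
        return "Login failed"
    amount = pay(store, user, item)
    return f"Paid {amount} for {item}"


store = Store()
print(checkout(store, "ana", "secret", "book"))
```

A later story such as "Review purchase" would add a thin piece to each layer rather than waiting for any one layer to be finished.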

In contrast, if you work in horizontal slices, you might design and build the database you need for a full solution then move onto creating the logic and look and feel.

We have found that splitting work between multiple, separate teams significantly increases project risk, especially when teams are from different organisations. Causes include increased coordination and integration of disparate work processes and priorities, more dependencies and greater likelihood of unexpressed assumptions. The bigger the batch, the more these factors come into play.

For larger projects, you need to find a balance between intentional architecture and emergent design. Some architectural choices are best planned, especially when particular options offer clear advantages or to make it easier for teams and tools to work together. In other instances, it is best that the architecture evolves as we learn more about user needs.

In Test Driven Development (TDD) you first write a test that defines the required outcome of a small piece of functionality. You then write only the code needed to fulfil the test. Next, you run all tests to make sure your new functionality works without breaking anything else. Lastly, you refactor to refine the code.

Focusing on small units and writing only the code required keeps batch size small. This makes debugging simpler. It also makes it easier to read and understand the intent of the tests. Additionally, focusing on the outcome keeps the user needs front of mind.
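Here is what one small TDD cycle might look like, using Python's built-in unittest module. The `order_total` function and its tests are hypothetical examples chosen for brevity, not code from a real project.

```python
import unittest


# Step 1: write the test first. It defines the required outcome.
class OrderTotalTest(unittest.TestCase):
    def test_sums_item_prices(self):
        self.assertEqual(order_total([5, 10]), 15)

    def test_empty_order_costs_nothing(self):
        self.assertEqual(order_total([]), 0)


# Step 2: write only the code needed to fulfil the tests.
def order_total(prices):
    return sum(prices)


# Step 3: run all tests to check nothing else has broken.
def run_all_tests() -> bool:
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderTotalTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


# Step 4 (refactor) would follow, re-running the tests after each change.
print(run_all_tests())
```

In practice you would run the tests before step 2 as well, to see them fail for the right reason.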

We can further embed user needs in the process via Behaviour Driven Development (BDD) and Acceptance Test Driven Development (ATDD). These make the business value more transparent and simplify communication between the business product owners, developers and testers. In BDD we start with structured natural language statements of business needs which get converted into tests. In ATDD we do the same thing with acceptance criteria.
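As an illustration, a structured statement of a business need can map onto a test almost line for line. This is a sketch in plain Python with a hypothetical `Shop` class. BDD tools such as Cucumber or behave wire the natural-language steps to code for you, which we only imitate here with comments.

```python
from dataclasses import dataclass, field


@dataclass
class Shop:
    """Hypothetical stand-in for the system under test."""
    basket: list[str] = field(default_factory=list)
    sent_emails: list[str] = field(default_factory=list)

    def add_to_basket(self, item: str) -> None:
        self.basket.append(item)

    def pay(self) -> None:
        self.sent_emails.append("Order confirmed: " + ", ".join(self.basket))
        self.basket.clear()


def test_shopper_gets_confirmation_email() -> None:
    # Given a shopper with a book in their basket
    shop = Shop()
    shop.add_to_basket("book")
    # When they pay
    shop.pay()
    # Then they receive a confirmation email
    assert shop.sent_emails == ["Order confirmed: book"]


test_shopper_gets_confirmation_email()
```

Because the Given, When and Then lines read as plain language, product owners, developers and testers can review the same statement.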

To keep the batches of code small as they move through our workflows we can also employ continuous integration. Once we’ve refactored code and it passes all tests locally we merge it with the overall source code repository. This happens as frequently as possible to limit the risks that come from integrating big batches.

Even if we integrate continuously we may still get bottlenecks at deployment. We may also discover that our work no longer matches the technical environment or user needs. Indeed, we can define a completed batch as one that has been deployed.

What we find in practice is that the more frequently we deploy, the better our product becomes. In Build Quality In, Phil Wills and Simon Hildrew, senior developers at The Guardian, describe their experiences like this:

“What has been transformative for us is the massive reduction in the amount of time to get feedback from real users. Practices like Test Driven Development and Continuous Integration can go some way to providing shorter feedback loops on whether code is behaving as expected, but what is much more valuable is a short feedback loop showing whether a feature is actually providing the expected value to the users of the software.

“The other major benefit is reduced risk. Having a single feature released at a time makes identifying the source of a problem much quicker and the decision over whether or not to rollback much simpler. Deploying much more frequently hardens the deployment process itself. When you’re deploying to production multiple times a day, there’s a lot more opportunity and incentive for making it a fast, reliable and smooth process.”

Having your whole team working together means you can talk face-to-face, facilitating small batch communication with real-time feedback and clarification.

It is especially important to have the Product Owner co-located with the team. If the Product Owner isn’t available to clarify requirements, requirements awaiting clarification tend to accumulate. This increases batch size, as well as slowing down the work. If physical co-location isn’t possible then virtual co-location is the next best thing. This means that Product Owners ensure they are responsive and available, even if they are not physically present.

Co-location can also mitigate risks caused by having multiple, separate teams. Again, if physical co-location is impossible, we can maintain small batch communication by bringing teams together for regular events such as daily standups, and by setting expectations for responsiveness and availability.

Big batch projects inherently increase risk by increasing the investment at stake; putting more time and money into the project makes the consequences of failure all the greater.

Conversely, smaller batches reduce the risk of a project failing completely. Reinertsen explains the mathematics like this. If you bet $100 on a coin toss you have a 50/50 chance of losing everything. But if you split that into twenty $5 bets, the odds of losing it all are 1 in 2 to the 20th power, or 1 in 1,048,576. That’s roughly one chance in a million.
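The coin-toss arithmetic can be checked exactly. This sketch assumes fair, independent tosses, and the function name is hypothetical.

```python
from fractions import Fraction


def chance_of_losing_everything(number_of_bets: int) -> Fraction:
    """Probability of losing every one of `number_of_bets` fair coin tosses.

    Assumption: each toss is independent with a 1/2 chance of losing.
    """
    return Fraction(1, 2) ** number_of_bets


print(chance_of_losing_everything(1))   # one $100 bet
print(chance_of_losing_everything(20))  # twenty $5 bets
```

The total stake is the same in both cases. What changes is how likely the worst case is.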

When we reduce batch size we get feedback faster. This limits the risk we will waste time and money building something which doesn’t meet users’ needs, or which has many defects. Even if our product delivers what our initial discovery work specified, in a big batch project we risk releasing software that has been rendered obsolete by changes in technology and user expectations.

Practice makes perfect, and smaller batches also produce shorter cycle times, meaning we do things like testing and deployment more regularly. This builds expertise, making delays or errors less likely.

Smaller batches also reduce the risk that teams will lose motivation. That’s because they get to see the fruits of their labours in action. Effort is maintained at a consistent and sustainable level, reducing the overwhelming pressure that tends to build at the end of big batch projects.

Large batches, on the other hand, reduce accountability because they reduce transparency. In a big batch, it’s harder to identify the causes of delays or points of failure. As motivation and a sense of responsibility fall, so too does the likelihood of success.

Because we deliver value sooner, the cost-benefit ratio of our project improves. This reduces the risk of an application becoming de-prioritised or unsupported.

While working in small batches seems counterintuitive because you lose economies of scale, the benefits far outweigh the downsides.

Moreover, the formula for determining the ideal batch size is simple. We make them as small as we can. Then we halve their size again.

We do this because small batches let us get our products in front of our customers faster, learning as we go. As a result they reduce the risk that we’ll go over time and budget or that we’ll deliver low quality software.

By ensuring we deliver working software in regular short iterations, small batches are a key tool in our Agile software development arsenal.

How to split a user story — Richard Lawrence

How reducing your batch size is proven to radically reduce your costs — Boost blog

Why small projects succeed and big ones don’t — Boost blog

Beating the cognitive bias to make things bigger — Boost blog

Test Driven Development and Agile — Boost blog

| Guidance | Case studies & resources |
|---|---|
| Introduction to project risk management with Agile | Agile risk management checklist: check and tune your practice |
| Effective prioritisation | |
| Reduce software development risk with Agile prioritisation | Reducing risk with Agile prioritisation: IntuitionHQ case study |
| Increasing transparency | |
| How Agile transparency reduces project risk | Risk transparency: Smells, Meteors & Upgrades Board case study |
| Limiting work in progress | |
| Manage project risk by limiting work in progress | Reducing WIP to limit risk: Blocked stories case study |
| Reducing batch size | |
| How to reduce batch size in Agile software development | Reducing batch size to manage risk: Story splitting case study |